Data warehouses such as BigQuery are the central powerhouse for data teams when it comes to building fast, reliable, and even complex analytical reports and dashboards . As more teams migrate to Google Analytics GA4 properties, a question that comes up is how to get that data into a data warehouse so that analysis can begin. This blog is going to explore three methodologies for exporting data from Google Analytics (GA) into BigQuery (BQ), and the pros and cons of each approach.

Table of Contents

Option 1: Link BigQuery to GA

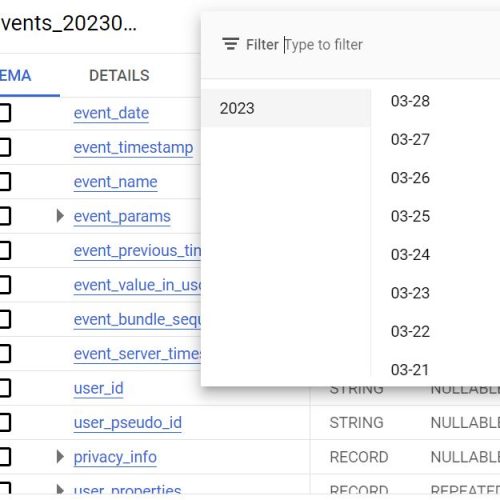

One of the primary benefits for selecting BigQuery as your data warehouse if you heavily use GA data is the fact that they are both part of the Google platform. In your property settings, you can add a link that will generate an export of GA data into BQ. This feature while previously available just to those on 360 is now available on basic GA4 properties. This link will automatically export GA4 data either via a stream (continuously) or as a daily export. The amount of data exported is capped at 1 million events per day. The data arrives in a “raw” event format and is stored in partitioned tables. You will need to use SQL to query the data and generate reports.

Pros

- Setup is quick and primarily involves enabling APIs and give the service account access.

- The export is managed by Google, i.e. no real pipeline maintenance is needed.

- Having the data in arrive in a raw format, provides great flexibility for the type of reporting that can be done. You are no longer restricted to the metrics only available in GA. For example, you can generate any x-day active user report. Additionally, you are able to enrich the dataset using data from other sources such as your CRM for more complex analysis.

Cons

- The primary con with the BQ Link is if you are not on GA 360, data is not backfilled. This means that you should configure this link as soon as your property is ready.

- Due to the fact that you’re dealing with raw event data, you will find that you need to write complex queries for relatively simple reports you can run via the API. This also means that you will need to be proficient in SQL. Not only that, there is still limited documentation on writing these queries to duplicate common reports.

- If you have deployed a daily export data versus a stream, data is going to be delayed about a day which limits real time reporting.

Option 2. Managed ETL Tools

The second option is to use a managed ETL Tool such as Fivetran, which supports a GA4 data connector. Configuration is relatively straight-forward especially with a helpful UI and you can also use the GA4 Query Explorer to verify the report generated based on the combination of fields you have selected.

Pros

- The insertion and updating of the data is fully managed by the ETL tool.

- Reports are built in a ready to use format. You may need to perform basic manipulations later, however you don’t necessarily need to have expert level SQL skills.

- ETL tools support various warehouse destinations such as Snowflake, not just BigQuery.

Cons

- You are limited to the fields and field configuration combinations the ETL tool supports. For example, at the time of this article, the Fivetran GA4 connector only supported the date dimension, not week or month.

- ETL tools charge based on monthly active rows, which means it can get expensive depending on how much data your reports are configured to bring in.

Option 3. GA4 Data API

The third option is using the Google Analytics Data API GA4 to generate reports which you can then import into a Bigquery table or various other destinations.

Below is sample code that generates a monthly first user source report and exports the data as a CSV.

import csv

from google.analytics.data_v1beta import BetaAnalyticsDataClient

from google.analytics.data_v1beta.types import (

DateRange,

Dimension,

Metric,

RunReportRequest,

)

property_id = 12345678 # replace with your GA4 property ID

# Using a default constructor instructs the client to use the credentials

# specified in GOOGLE_APPLICATION_CREDENTIALS environment variable.

# Ensure that this is set.

client = BetaAnalyticsDataClient()

request = RunReportRequest(

property=f"properties/{property_id}",

dimensions=[

Dimension(name="year"),

Dimension(name="month"),

Dimension(name="firstUserSource")

],

metrics=[Metric(name="activeUsers"),

Metric(name="totalUsers"),

Metric(name="conversions"),

Metric(name="eventCount"),

Metric(name="sessions"),

Metric(name="engagedSessions"),

],

date_ranges=[DateRange(start_date="2022-06-01", end_date="2023-12-31")],

)

response = client.run_report(request)

header = []

for dim in response.dimension_headers:

header.append(dim.name)

for met in response.metric_headers:

header.append(met.name)

output = []

output.append(header)

for row in response.rows:

rn = []

for dim_val in row.dimension_values:

rn.append(dim_val.value)

for met_val in row.metric_values:

rn.append(met_val.value)

output.append(rn)

file_name = "monthly_first_user_source.csv"

with open(file_name, "w", newline='', encoding="utf-8") as f:

writer = csv.writer(f)

writer.writerows(output)

Pros

- The API provides a flexible option for generating any valid combination of reports using all fields exposed via the API.

- This option also provides control over how and where the data is written, and also at what frequency.

- Depending on how much data you want to export, this option can be much cheaper compared to a managed ETL tool.

Cons

- You will need to be proficient in at least one of the coding languages supported.

- There is additional legwork required when it comes to deploying and maintaining an ETL solution that updates a table on a schedule.

- API quotas also need to be taken into consideration.

Need help with your UA to GA4 migration or GA4 data ingestion into a data warehouse? Reach out to hello@moderndata.co